Asynchronous Logic Sign-Off Flows Beyond Structural CDC

SSO Symposium Case Study by Louis Cardillo of NVIDIA

Case Study Overview

Louis Cardillo of NVIDIA presented at Real Intent’s 2023 Static Sign-Off Symposium on how Nvidia enabled expanded its sign-off flow beyond structural clock domain crossing (CDC), to address more complex asynchronous design challenges. Meridian CDC with Simportal and Meridian RDC were deployed.

Below are lightly edited highlights of what he presented.

Synchronous vs. Asynchronous Design Logic Verification

Scale of Async logic usage

This is an 80 billion transistor chip, in Nvidia’s line that we would call GPUs.

From an asynchronous design sign-off point of view, it’s not our most complicated chip. We have SoCs with much higher clock domain counts and more varied IP sources; they have a lot more async reset domains and even more clock domains. Even though they might be a fifth or a seventh of the size of these, they’re actually quite a bit harder to sign off. Most of the flow is harder, but in particular, the asynchronous design flows are harder.

Being in a company with established flows and products means we’re not creating a new flow. We have a flow that’s existed for a long time and we’re continuously modifying it to make it better. And while we’re modifying it, we are taping out chips seven to eight times a year. There’s a huge variety of designs so these numbers are general. We have designs that have almost no async resets. We have designs that are almost all async resets

Large variation across top level blocks

10’s to 100’s of clock domain

100’s to >100K synchronizers

>1% to 70% flops with async resets

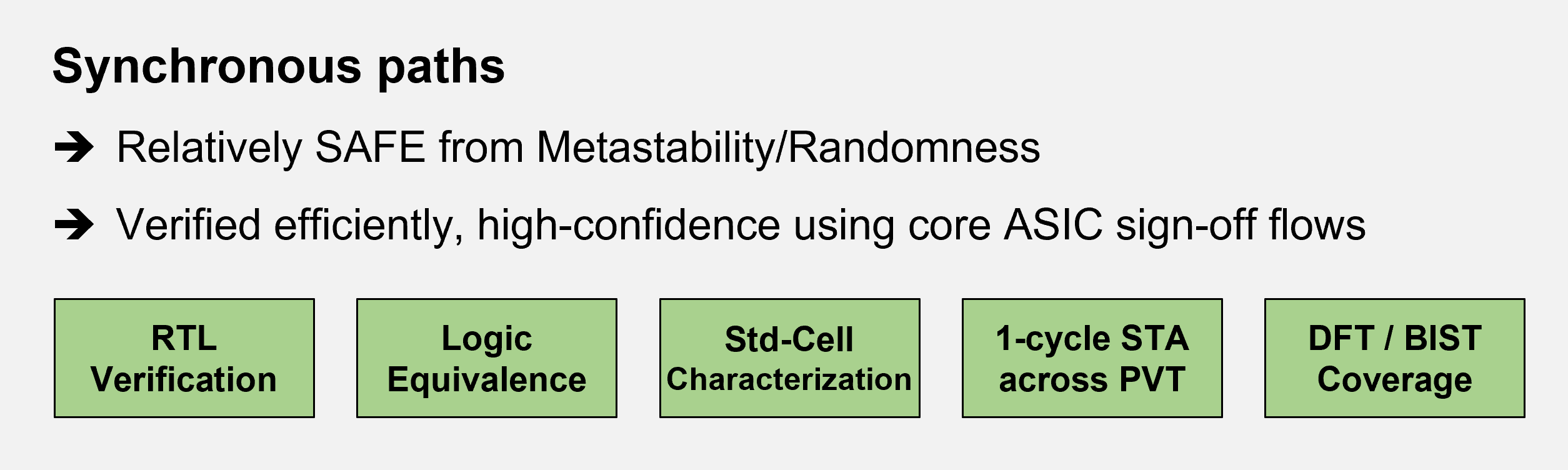

Synchronous paths are >99% of typical designs

Even a chip that has a lot of asynchronous logic is still 99 percent synchronous. That is a good thing because that’s much easier and more predictable; it is dependable to walk through a fairly straightforward ASIC flow and sign things off.

From a metastability randomness point of view, there’s no risk in in the normal flows if you are using single cycle signoff in a standard cell flow. Logic equivalence checking proves our netlist is the same, as long as we meet single cycle timing.

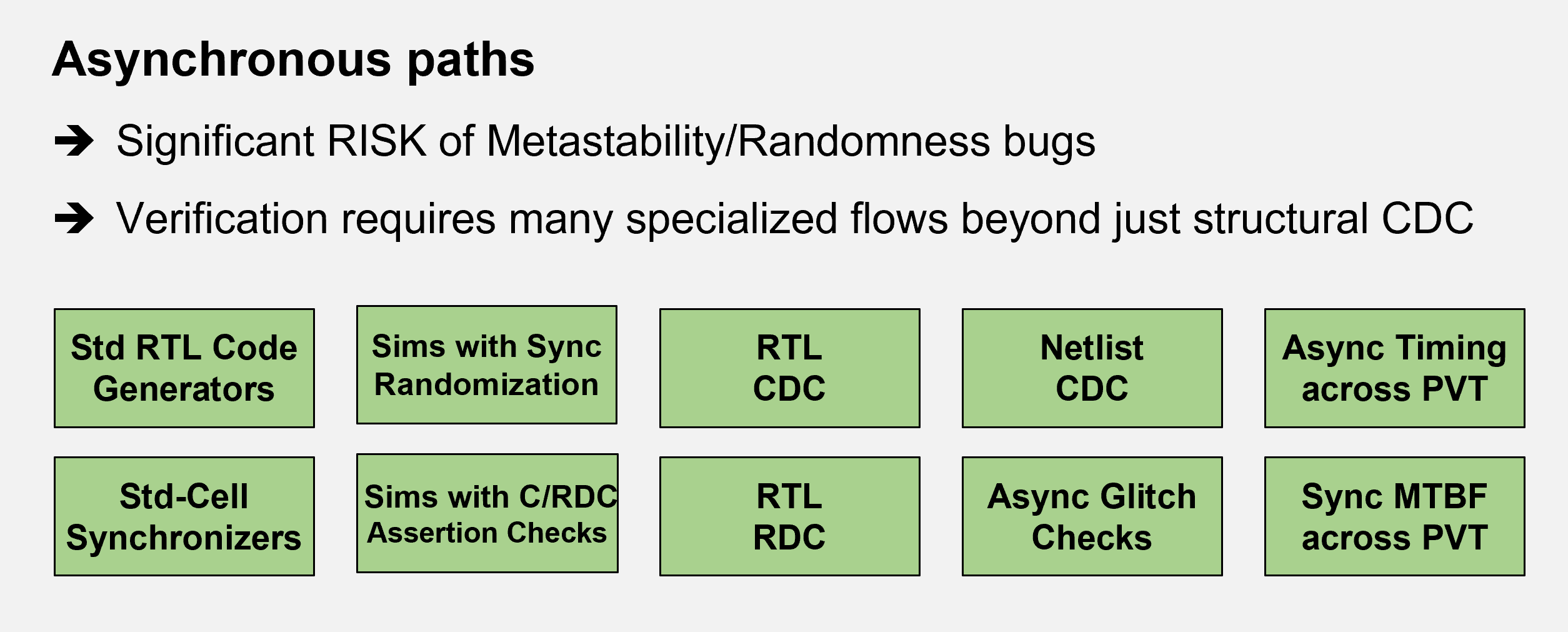

Asynchronous paths are <1% of typical designs

Those flows are very dependable and work very well for synchronous logic; but depending on how it is counted, a percent or so of the paths on the chip are asynchronous.

It’s very easy to make mistakes with asynchronous logic. Many of the assumptions people make about synchronous logic — things they just take for granted — aren’t going to be true. For example, even experienced design engineers will think of asynchronous sign-off as clock domain crossing (CDC) sign-off.

However, with CDC sign-off only, a team can get 100% coverage, but the chip still isn’t going to work unless they meet a number of other requirements. I discuss some of them below.

Meridian CDC and RDC workflows help reduce noise

I’d like to talk specifically about how engineers can use Meridian CDC and Meridian RDC sign-off flows to reduce noise.

1. Discourage waivers, encourage constraints

In general, it’s better for someone to specify that something is stable than to waive something because they know it’s stable. Tell the tool the truth and let it analyze that — sometimes there are other side effects of that.

There is a lot of value to specifying it as a constraint — the tool can sanity check it and do consistency checks.

A Meridian Simportal flow can help validate assumptions in simulation.

2. Run at different hierarchy levels

Another common technique is running at multiple hierarchy levels. We’re building workflows for RTL designers, and they want quick feedback. We don’t want to wait until chip assembly to give them feedback. We want to be able to run it at very small unit levels, then assemble those blocks as we go up to higher levels.

Someone asked me, “When do you run CDC and who runs it?” I answered, “The designer runs it. The person running regressions runs it. We run it in two different trees, the RTL tree and the physical design tree.”

This fits this whole left shift mentality. But it’s not like when we left shift, we stop running it on the right — we still run it later to catch downstream things such as DFT, ECOs. We’re just trying to find the bugs earlier.

3. Combine modes when it makes sense

Multi-mode analysis is actually really helpful and really effective for a lot of things. It matches the way our constraints look in the back end with multi-clock propagation.

But how do we get the right errors and warnings violations to the right designer?

These teams work on totally different schedules when they sign things off. We want to give them the control just to look at the bugs that they can fix.

Simulation Checks for CDC & RDC Assumptions

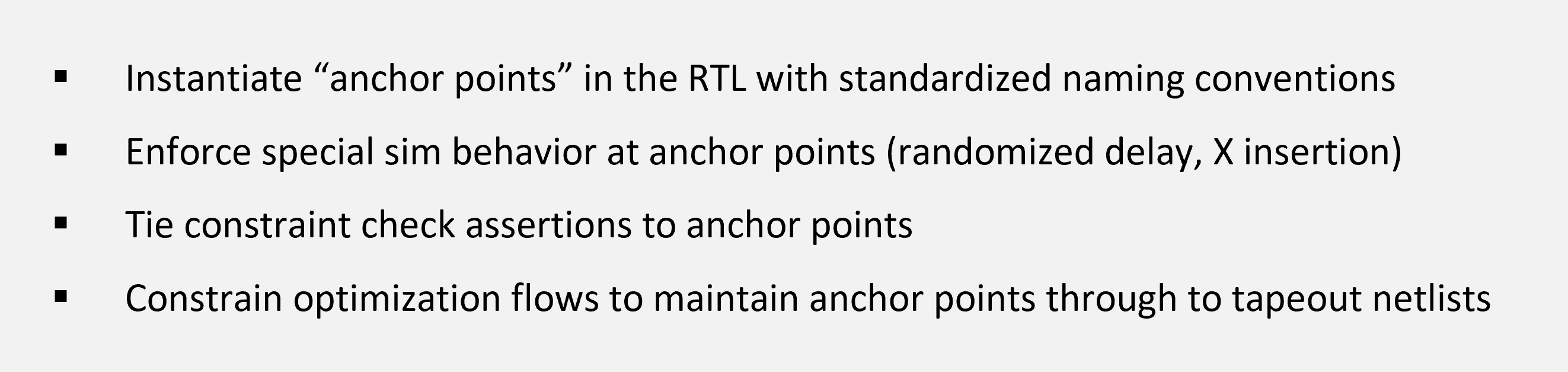

RTL simulations can be augmented to help verify CDC & RDC constraints & async logic behavior

There are some general principles that we’ve started to use more and more over time. One of these are anchor points in the RTL — we can tie special simulation behavior to them. When our back-end team looks at a violation, they can see that it is on this anchor point that existed in the in the front end.

Pointing them to the front-end analysis of the issue helps them deal with noise. Was it waived in the front end? Why was it waved? Who waved it? We used to and still do communicate a lot of this through emails and bug reports, but this is a way to tie it into the design. It’s part of our RTL. It’s part of our netlist.

Meridian Simportal generates simulation checkers to help verify CDC/RDC assumptions

Meridian Simportal is an extension of this idea. Simportal takes things where we don’t have anchor points and helps to answer why something that has passed CDC sign-off still fails.

For example, if a person said a signal was stable in a file in one place in one design review and we use that in CDC to propagate and knocked out 20,000 warnings .. what if that signal is not stable?

Asynchronous Resets

Async resets cause an explosion of async edges

We’ve been doing RDC-like checks for quite a while, but a lot of what we did in the past were straight structural checks or design rule type checks.

We evolved into wanting to treat our RDC tools and flows more like we do CDC.

Resets are dangerous — and they’ve become a lot more dangerous because of IP reuse. Designers would think “If I get through power-on reset on my chip, that’s all I have to worry about.” But now they put that IP on another chip and hook them together. Do this with a thousand IPs and they’ve got power-on resets that happen once, but a lot of these blocks are reset hundreds of times a second all over the chip. There are resets going on all the time, for different reasons for different chips.

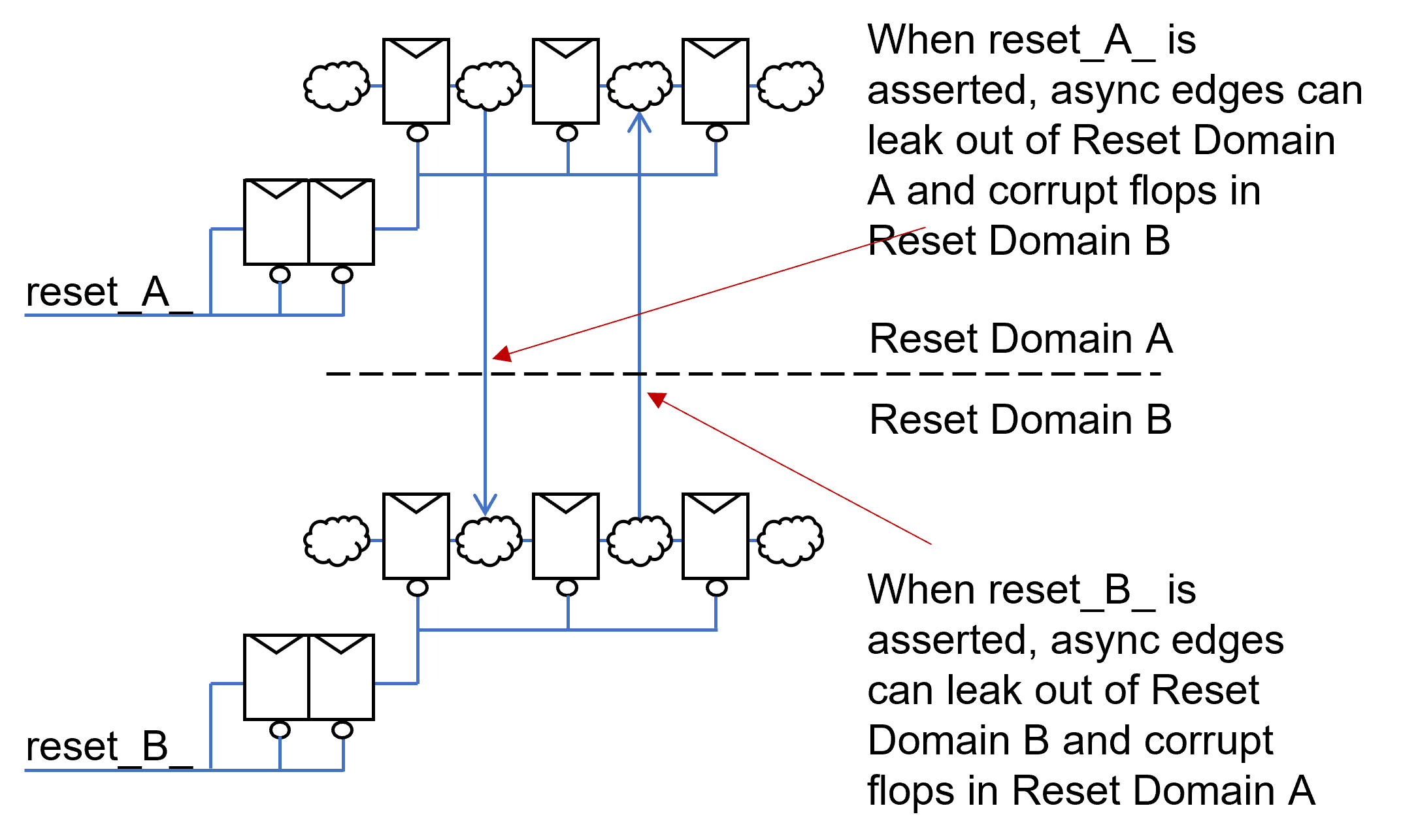

Resets can leak from one block to another if only one is reset one at a time.

In a nutshell, if it was a power on reset, they didn’t worry so much about the assert edge. But in in a chip with hundreds of these domains, the assert edge of reset is actually really dangerous. It asserts and all those flops are pushed into their state — and there is just a crazy explosion of asynchronous edges that are everywhere in the design. Every flop is sending out these asynchronous edges. If they hit any downstream flop that’s not also being reset and it’s being clocked, there are more async edges. Ultimately, the danger leaks out of the block that was intended to be reset.

All those things are fine. But no matter how hard we try to make a dependable trustworthy correct by construction design, we must then verify it.

RTL bugs found with Meridian RDC

That’s why we use RDC sign-off flows. Meridian RDC has grown for us; we’re using it much more now — in many cases everywhere that we run CDC.

It’s different because it’s within a clock domain, so even some things that you might not run CDC sign-off on you could still want to run RDC on if you have multiple reset domains.

These are the kind of bugs that we’ve actually found using Meridian RDC. A number we might find later — there’s also a left shift thing going on here — finding it early in RTL is better.

Common things we find are leaking async paths that are coming out, or you can have race conditions between resets, and see what they’re resetting. And then late in the design cycle we have ECOs; so, finding it early becomes quite valuable, so we don’t either miss it or not find it until the back end physical design.

How to Not Break a Chip

I will wrap up with a few broad statements on how to not break a chip from an async interface point of view.

- Use standard plugins whenever possible, don’t reinvent the wheel for async interfaces

- Run simulations with randomizing synchronizer models

- Run CDC and RDC early and often, and after late ECOs

- Check CDC and RDC constraints with simulation assertions

- Don’t assume that correct async logic in synthesized netlists stays that way in layout

- Check that synchronizer depths will meet MTBF goals across PVT

- Run async timing checks to cover async skew assumptions across PVT